TECHNICAL BRIEF NO. 16 2007


The Campbell Collaboration: Systematic Reviews and Implications for Evidence-Based Practice

Herbert M. Turner, III, PhD, University of Pennsylvania, Editor, C2 Education Coordinating Group

Chad Nye, PhD, University of Central Florida, Editor, C2 Education Coordinating Group

The National Center for the Dissemination of Disability Research (NCDDR) is pleased to bring you this issue of Focus highlighting current work of the Campbell Collaboration (C2). The new scope of work of the NCDDR includes several activities that involve C2 and its development of evidence-based resources such as systematic reviews of research evidence. Currently, C2 focuses on three substantive research areas: criminal justice, social welfare, and education. The NCDDR plans to explore with C2 ways to establish a coordinating group or subgroup that can focus on developing systematic reviews of evidence pertaining to disability research, including high-quality research supported through funding from the National Institute on Disability, Independent Living, and Rehabilitation Research (NIDILRR). In this issue of Focus, two editors of the C2 Education Coordinating Group provide some general information about C2.

About the Campbell Collaboration

The Campbell Collaboration (C2) is an international volunteer network of policymakers, researchers, practitioners, and consumers who prepare, maintain, and disseminate systematic reviews of studies of interventions in the social and behavioral sciences (see https://www.campbellcollaboration.org). The organization is named after Donald T. Campbell, the American social scientist and champion of public and professional decision making based on sound evidence. C2 reviews are designed to generate high-quality evidence in the interest of providing useful information to policymakers, practitioners, and the public regarding what interventions help, harm, or have no detectable effect.

Established in 2000, C2 is a sibling organization of the international Cochrane Collaboration and is patterned after it. Named for British epidemiologist Archie Cochrane, the Cochrane Collaboration was established in 1993. It has produced nearly 3,000 systematic reviews of studies of health-related interventions and has developed uniform standards for the retrieval, evaluation, synthesis, and interpretation of the reviewed studies. C2 builds on the Cochrane Collaboration's experience. The two organizations cooperate to understand how to produce high-quality reviews, based on high standards of evidence, to serve the public interest. In referring to the progress the Cochrane Collaboration made in the 1990s in developing a transparent process for systematically reviewing the evidence on what works (or what doesn't) in medicine, the president of the Royal Statistical Society, Adrian Smith, wrote the following in his presidential address:

But what's so special about medicine? We are, through the media, as ordinary citizens, confronted daily with controversy and debate across a whole spectrum of public policy issues. But typically, we have no access to any form of systematic "evidence base"—and therefore no means of participating in the debate in a mature and informed manner. Obvious topical examples include education— what does work in the classroom?—and penal policy— what is effective in preventing re-offending? (Smith, 1996, as cited in Chalmers, 2003, p. 34)

Formation of C2 was first explored at meetings convened in London in July 1999 and in Stockholm in December of the same year. This led to an inaugural meeting in 2000 in Philadelphia, in which C2's mission was tentatively established. The consensus among the 100 people from 15 countries attending the inaugural meeting was that C2's mission carried powerful, international, and cross-disciplinary appeal. Subsequent annual meetings were held in Philadelphia, Stockholm, Washington, Lisbon, and Los Angeles. As it approaches its 7th anniversary, C2 holds fast to its mission to prepare, maintain, and disseminate systematic reviews with the expectation of delivering more holistic answers to issues raised by policymakers, practitioners, and the public.

A holistic understanding of evidence is especially important in supporting learned decision making in the social, behavioral, and education sciences. A systematic review can be defined as "the application of procedures that limit bias in the assembly, critical appraisal, and synthesis of all relevant studies on a particular topic. Meta-analysis may be, but is not necessarily part of this process" (Last, 2001, as cited in Chalmers, Hedges, & Cooper, 2002, p. 17). A meta-analysis can be defined as "the statistical synthesis of the data from separate but comparable studies, leading to a quantitative summary of the pooled results" (Last, 2001, as cited in Chalmers, Hedges, & Cooper, 2002, p. 17).

Organizational Structure

An international steering group with two co-chairs is responsible for setting policy. The Secretariat, which is the collaborating operation center, supports all of the activity. Three substantive coordinating groups (Crime and Justice, Social Welfare, and Education) currently orchestrate work on systematic reviews. A Methods group attends to crosscutting issues in statistics, quasi-experimental design, information retrieval, and process and implementation regarding randomized and quasi-experiments. A Users group coordinates the collaboration's relationship with partner organizations that have related missions, end-user networks, Web sites, and initiatives, such as the University of London's EPPI-Centre (Evidence for Policy and Practice Information and Co-ordinating Centre). This coordination helps ensure that information is accessible to people and intermediary organizations.

Specialized C2 centers such as NC2, the Nordic Campbell Center, are evolving to serve training, production, and communication needs, particularly to geographic areas outside of the United States. Finally, the recent affiliation with the American Institutes for Research (AIR), a premier policy research organization that conducts high-quality research across a broad spectrum of fields including health care, has strengthened the C2 administrative infrastructure to increase the consistent production of high-quality systematic reviews.

Free Web-Accessible Registers

The C2 library houses C2's core products. The library is accessible through the C2 Web site, free of charge, and contains all registers; information on all procedures, guidelines, and standards used in reviews; and information on collaborators that are conducting reviews. The registers are briefly described below, followed by a short description of the process for systematic review production and an example of a systematic review, which is the collaboration's most important product. Many of C2's products are accessible through the organization's Web site at https://www.campbellcollaboration.org and are updated as often as resources permit.

C2-SPECTR. The C2 Social, Psychological, Educational, and Criminological Trials Register (C2-SPECTR) is a core product. C2-SPECTR contains over 14,000 citations to reports of randomized experiments or possibly randomized experiments. These reports can be, in part, ingredients for the collaboration's systematic reviews and for systematic reviews by others. Many of the citations are accompanied by brief abstracts, although the abstracts are not uniform in content because they come from a variety of sources. Resources to develop uniform and informative abstracts are being sought to increase the number of cataloged citations and to improve the presentation format.

C2-PROT. The Prospective Trials Register aids in the maintenance of systematic reviews by providing reviewers with access to a searchable registry of "prospective" trials. C2-PROT stores all available information on trials that are currently being conducted or will be conducted in the near future. When available, contact information is provided, which allows the reviewer to closely monitor and track the progress of studies as they affect C2 reviews. C2-PROT, thus, has the potential to be an efficient, time-saving tool for systematic reviewers. C2-PROT is helpful not only to C2 reviewers but also to anyone who is interested in the direction of future research or who wants to be informed about newly initiated trials with the ability to monitor progress. Though not as well developed as C2-SPECTR, C2-PROT has added over 300 trials since 2003.

C2-RIPE. The C2 Register of Interventions and Policy Evaluation houses approved titles, protocols, reviews, and abstracts. In addition, it contains refereed comments and critiques as they are submitted for specific reviews. As these documents are approved within the four C2 coordinating groups, they are published in the C2-RIPE database. Titles must be approved first (for a list of registered systematic review titles, see "Selected List of Reviews by Coordinating Groups"). Registered titles with an approved protocol, review, abstract, or refereed comment will have a "View Documents" hypertext link in the database.

Systematic Reviews

Producing systematic reviews that are as free of bias as possible requires screening, coding, interpreting, and summarizing study reports, which is a long and resource-intensive process. Consequently, production of reviews has been slower than anticipated but is beginning to gather momentum as a result of affiliations with organizations such as AIR and increased visibility in academia, government, professional associations, and the public. To date, 17 reviews have been published, under the auspices of the relevant coordinating group, in the C2 library.

Authors of these reviews have followed the eight steps of a C2 review. Adherence to these steps is verified by the collaboration's rigorous peer review process, which requires peer review at the protocol (or research plan) stage and review stage by two substantive experts and one methodological expert. To illustrate this process, we provide an example of a C2 systematic review on parent involvement, which empirically assessed the effect of parent involvement on elementary school children's academic achievement (Nye, Turner, & Schwartz, 2006).

An Example of a C2 Systematic Review on Parent Involvement

Problem Formulation and Inclusion Criteria. The review addressed the question, "What is the effect of parent involvement on the academic achievement of elementary school children?" Based on our narrative review of the parental involvement literature, we decided a priori to include only those studies that met three criteria: parents routinely provided systematic education enrichment activities outside of formal schooling; academic achievement was measured using a standardized or criterion-referenced measure; and random assignment was used to create at least a treatment group and a control group. Under this definition, for example, assistance with homework counted as parent involvement, but parent attendance at PTA meetings did not.

Locating and Retrieving Studies. We searched 20 electronic databases to locate studies that met the eligibility criteria. Many of these are found in the C2 Information Retrieval Policy Brief, which provides a list of international databases appropriate to C2 coordinating group interests. Some databases are well known, such as ERIC and PsycINFO, whereas others are not, such as the System for Information on Grey Literature in Europe (SIGLE) or the Chinese ERIC database. We e-mailed over 1,500 policymakers, researchers, practitioners, and consumers to request referrals to studies or to people who know of studies relevant to our review and its inclusion criteria. Over 100 people responded. Through the database search and the e-mail requests we retrieved the full texts for 100 studies, of which 20 met our inclusion criteria.

Extracting Data From Studies. We independently coded each of the 20 studies for characteristics such as design methods used (e.g., multigroup comparison with random assignment and no attrition), intervention characteristics (e.g., parent as reading tutor for 10 weeks), outcome measures (e.g., standardized reading achievement), and target population (e.g., fifth graders). We also coded the following information, if it was available, for effect size calculations: means, standard deviations, F-values, t-values, p-values, and sample sizes for both the parent involvement and control groups on relevant outcome measures. Two coders (and in rare cases three coders) reconciled coding discrepancies.
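The reconciliation step described above can be illustrated with a minimal sketch. It assumes each coder's work is stored as a dictionary keyed by study, which is not something the review specifies; the function name `find_discrepancies`, the study identifiers, and the field names are all hypothetical and chosen only for illustration.

```python
def find_discrepancies(coder_a, coder_b):
    """Return {study_id: {field: (value_a, value_b)}} for every disagreement.

    Both arguments map a study identifier to a dict of coded fields.
    The data layout is an assumption made for this sketch.
    """
    discrepancies = {}
    for study_id in sorted(set(coder_a) | set(coder_b)):
        record_a = coder_a.get(study_id, {})
        record_b = coder_b.get(study_id, {})
        # Compare every field that either coder recorded for this study.
        diffs = {
            field: (record_a.get(field), record_b.get(field))
            for field in sorted(set(record_a) | set(record_b))
            if record_a.get(field) != record_b.get(field)
        }
        if diffs:
            discrepancies[study_id] = diffs
    return discrepancies


# Hypothetical coding sheets from two independent coders.
coder_a = {
    "study_01": {"design": "randomized", "grade": 5, "n_treatment": 40, "n_control": 38},
    "study_02": {"design": "randomized", "grade": 3, "n_treatment": 25, "n_control": 25},
}
coder_b = {
    "study_01": {"design": "randomized", "grade": 5, "n_treatment": 40, "n_control": 39},
    "study_02": {"design": "randomized", "grade": 3, "n_treatment": 25, "n_control": 25},
}

to_reconcile = find_discrepancies(coder_a, coder_b)
for study_id, diffs in to_reconcile.items():
    print(study_id, diffs)
```

Only the fields on which the coders disagree are returned, so the list handed to the reconciling coders stays short.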

Computing Effect Sizes. We then computed an effect size for each study. We took the difference between the mean on an outcome measure (such as standardized reading achievement) for the parent involvement group and the mean on the same outcome measure for the control group and then divided that difference by the pooled standard deviation of both groups. For each study with multiple outcome measures that were conceptually similar (such as reading achievement and overall reading), we averaged the computed effect sizes across outcomes to produce one effect size per study. To assess the precision of each effect size estimate, we computed its standard error and the associated lower and upper limits of the 95% confidence interval. For more information on computing effect sizes, see the Winter/Spring 2004 issue of The Evaluation Exchange (vol. 10, number 4; Turner, Nye, & Schwartz, 2004).
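The calculation described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the review's actual code: the standard error uses a common large-sample approximation for a standardized mean difference, the function names are hypothetical, and the input numbers are invented.

```python
import math


def standardized_mean_difference(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Return (d, se, ci_low, ci_high) for a treatment vs. control comparison."""
    # Pooled standard deviation of both groups.
    pooled_var = ((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / (n_t + n_c - 2)
    pooled_sd = math.sqrt(pooled_var)
    # Effect size: mean difference divided by the pooled standard deviation.
    d = (mean_t - mean_c) / pooled_sd
    # Large-sample approximation to the standard error of d (an assumption here).
    se = math.sqrt((n_t + n_c) / (n_t * n_c) + d ** 2 / (2 * (n_t + n_c)))
    # 95% confidence limits based on the normal distribution.
    return d, se, d - 1.96 * se, d + 1.96 * se


def average_effect_size(effect_sizes):
    """Average conceptually similar outcomes into one effect size per study."""
    return sum(effect_sizes) / len(effect_sizes)


# Invented example: parent involvement group vs. control group on a reading test.
d, se, ci_low, ci_high = standardized_mean_difference(
    mean_t=105.0, sd_t=10.0, n_t=50, mean_c=100.0, sd_c=10.0, n_c=50
)
print(f"d = {d:.2f}, SE = {se:.3f}, 95% CI = [{ci_low:.2f}, {ci_high:.2f}]")

# Invented example: two similar reading outcomes from the same study.
study_d = average_effect_size([0.40, 0.30])
```

Simple averaging of outcomes within a study mirrors the text; it ignores the correlation between outcomes, which matters for the standard error but not for the point estimate.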

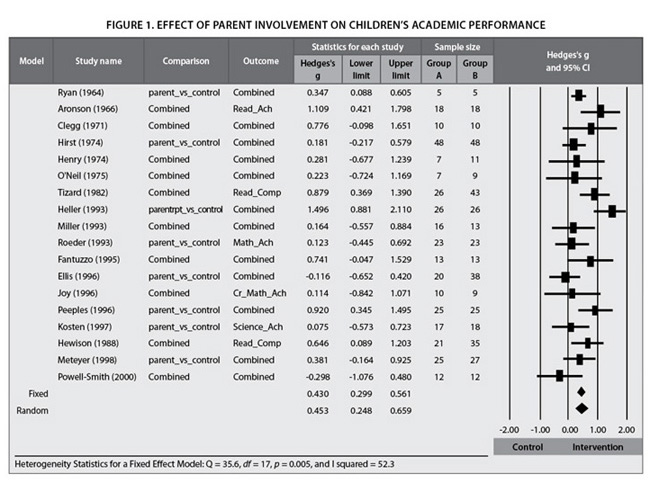

Interpreting Results Using a Forest Plot. The graphical display of effect sizes for individual studies and an overall effect size is called a "forest plot" because of its potential to allow the analyst to "see the forest for the trees." The forest plot of effect sizes for a subset of 18 studies from our review is shown in Figure 1. For Study 1 (Ryan, 1964), the effect size of parent involvement for the reading achievement outcome is d = .35. In other words, children in the parent involvement group scored, on average, over one third of a standard deviation higher on reading achievement than children in the control group. The whiskers that extend from d = .35 are the 95% confidence interval for that effect size. Staying with Study 1, we see that the interval ranges from .09 to .61 and does not include zero, so it is highly improbable that the population effect size we are estimating is zero.
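As a small worked illustration of reading one row of the forest plot, the sketch below takes the Study 1 values quoted above (d = .35, interval from .09 to .61) and recovers the implied standard error and z statistic. It assumes the interval is a symmetric, normal-theory 95% interval; the helper names are hypothetical.

```python
def se_from_ci(ci_low, ci_high, z=1.96):
    """Recover the standard error implied by a symmetric normal-theory interval."""
    return (ci_high - ci_low) / (2 * z)


def ci_excludes_zero(ci_low, ci_high):
    """True when the whole interval lies on one side of zero."""
    return ci_low > 0 or ci_high < 0


# Study 1 values as reported in the text.
d = 0.35
ci_low, ci_high = 0.09, 0.61

se = se_from_ci(ci_low, ci_high)   # roughly 0.13
z_stat = d / se                    # roughly 2.6
print(f"SE = {se:.3f}, z = {z_stat:.2f}, excludes zero: {ci_excludes_zero(ci_low, ci_high)}")
```

An interval that excludes zero corresponds to a z statistic beyond 1.96 in absolute value, which is the same conclusion the whiskers show visually.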


Interpreting Results Using Bare-Bones Meta-Analysis. The forest plot in Figure 1 clearly illustrates how the magnitudes of the effect sizes, confidence intervals, and p-values vary across studies. This variation is one reason narrative reviews require reviewers to reconcile what often appear to be contradictory results. However, the last effect size of d = .45 in the bottom row of the forest plot quantitatively reconciles these apparently contradictory results. This d value is the combined weighted standardized mean difference, under a random effects model, for all parent involvement effect sizes presented in the forest plot. (In a random effects model, we assume that the effect size in the population varies from study to study.) This d value is the product of a bare-bones meta-analysis, which looks at effect sizes without additional analysis such as moderator analysis. Based on it, we conclude that children in the parent involvement group scored a little more than two fifths of a standard deviation above the average for children in the control group. Extensions of the bare-bones meta-analysis could include moderator, subgroup, or meta-regression analyses, which are beyond the scope of this article. For a more in-depth treatment of meta-analysis, see Lipsey and Wilson (2001), Hunter and Schmidt (2004), and Cooper (1998).
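To make the idea of a random effects combined estimate concrete, here is a minimal sketch. The text does not say which estimator the review used; this example assumes the DerSimonian-Laird method for the between-study variance, which is one widely used choice. The function name and the four effect sizes and standard errors are invented for illustration and are not the review's data.

```python
import math


def random_effects_pool(effects, std_errors):
    """Pool effect sizes with DerSimonian-Laird random effects weights.

    Returns (pooled_d, pooled_se, tau2), where tau2 is the estimated
    between-study variance.
    """
    # Step 1: fixed-effect (inverse-variance) weights and pooled estimate.
    w = [1.0 / se ** 2 for se in std_errors]
    sum_w = sum(w)
    fixed = sum(wi * di for wi, di in zip(w, effects)) / sum_w
    # Step 2: Q statistic measuring heterogeneity around the fixed estimate.
    q = sum(wi * (di - fixed) ** 2 for wi, di in zip(w, effects))
    df = len(effects) - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    # Step 3: between-study variance, truncated at zero.
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    # Step 4: random effects weights add tau2 to each study's variance.
    w_star = [1.0 / (se ** 2 + tau2) for se in std_errors]
    pooled = sum(wi * di for wi, di in zip(w_star, effects)) / sum(w_star)
    pooled_se = math.sqrt(1.0 / sum(w_star))
    return pooled, pooled_se, tau2


# Invented effect sizes and standard errors for four hypothetical studies.
effects = [0.35, 0.10, 0.80, 0.45]
std_errors = [0.13, 0.20, 0.25, 0.15]

pooled, pooled_se, tau2 = random_effects_pool(effects, std_errors)
print(f"pooled d = {pooled:.2f}, 95% CI = "
      f"[{pooled - 1.96 * pooled_se:.2f}, {pooled + 1.96 * pooled_se:.2f}], tau^2 = {tau2:.3f}")
```

When the studies agree closely, the estimated between-study variance shrinks to zero and the result coincides with a fixed-effect analysis; when they disagree, small and large studies are weighted more evenly.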

The Potential Contributions of C2 and Systematic Reviews

The narrative literature review of single studies on parental involvement revealed conflicting results of effects on academic achievement: some studies showed positive effects, others showed negative effects, and still others showed no effects. These conflicting results, and difficulties in reconciling them through a narrative review, can be traced to reviewers' traditional reliance on assessing the effectiveness of individual studies based on statistical significance alone or vote counting. Lack of statistical significance can be due to a number of factors, including small sample sizes and poor study design. A C2 systematic review that includes a meta-analysis empowers the reviewer to account for the methodological quality of studies and to combine those studies that individually possess insufficient statistical power to detect the effect of parent involvement on students' academic achievement but possess sufficient power to detect effects, if they exist, when aggregated across studies. By computing an overall d index that weights each study by sample size, what appear to be conflicting study results can be quantitatively reconciled to determine whether the parent involvement intervention helps, harms, or has no detectable effect.
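The contrast between vote counting and pooling can be shown with a short sketch. The five studies below are invented: each is too small to reach statistical significance on its own, yet the pooled estimate is clearly distinguishable from zero. The sketch assumes inverse-variance weights, which closely track sample size, and a fixed-effect combination for simplicity; the function names are hypothetical.

```python
import math


def count_significant(effects, std_errors, z_crit=1.96):
    """Vote counting: how many studies are individually significant at 5%?"""
    return sum(1 for d, se in zip(effects, std_errors) if abs(d / se) > z_crit)


def fixed_effect_pool(effects, std_errors):
    """Inverse-variance weighted average and its standard error."""
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * d for w, d in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se


# Invented data: five small studies with similar positive effects.
effects = [0.25, 0.35, 0.30, 0.20, 0.38]
std_errors = [0.20, 0.20, 0.20, 0.20, 0.20]

votes = count_significant(effects, std_errors)
pooled, pooled_se = fixed_effect_pool(effects, std_errors)
pooled_z = pooled / pooled_se

print(f"significant studies: {votes} of {len(effects)}")
print(f"pooled d = {pooled:.3f}, z = {pooled_z:.2f}")
```

A vote count of these studies would suggest no effect, while the pooled z statistic exceeds the conventional 1.96 threshold, which is the gain in statistical power the paragraph describes.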

Implications of C2 Systematic Reviews

What do we do with the results of a systematic review? This is the one topic that is often the focus of discussion and even heated debate. Few would argue with the premise that a systematic review is methodologically, statistically, and conceptually sound, but critics of systematic reviews have questioned whether a systematic review can help us identify interventions that can, for example, improve academic achievement of children, employment outcomes for people with disabilities, or housing opportunities for inner-city families. The overarching response to this line of questioning reminds us of the old saying, "If you continue to do what you've always done, you will continue to get what you've got!" To continue to conduct primary intervention research without ever taking stock of the existing state of knowledge in a domain certainly encourages us to advance down the road of "…continuing to do what we've always done" (Hunt, 1997).

C2 encourages the production of systematic reviews through an organized, systematic, transparent, and methodologically sound system of data collection, extraction, analysis, and interpretation. Such reviews can guide the practitioner in the day-to-day delivery of services and, at the same time, inform the research community of the state of knowledge in a discipline and suggest areas in need of further research. In addition, policymakers (e.g., legislators) and decision makers (e.g., agency managers) can use the systematic review and meta-analysis to guide them in the adoption and implementation of programs that have demonstrated effectiveness in meeting the needs of the community being served.

For C2 to reach its potential there is still much work to be done. An immediate priority is to develop the organization's administrative infrastructure to increase the efficient and consistent production of systematic reviews that meet C2's standards and provide a practical guide to the educational, social welfare, and criminal justice professional.

Selected C2 Resources

To stay current on C2 activities, sign up to receive the electronic newsletter, C2 Quarterly, and other announcements through the C2 Web site: https://www.campbellcollaboration.org/newsletters/index.php

- The Campbell Collaboration Library
  https://www.campbellcollaboration.org/campbell_library/index.php

- C2 Coordinating Groups
  https://www.campbellcollaboration.org/coordinating_groups/index.php

- C2 Information Methods Policy Briefs
  https://www.campbellcollaboration.org/resources/research/Methods_Policy_Briefs.php

- Guidelines for Systematic Review Authors and Reviewers
  https://www.campbellcollaboration.org/resources/guidelines.shtml

- C2-SPECTR: Social, Psychological, Educational, and Criminological Trials Register
  https://www.campbellcollaboration.org/spectr.asp (inactive URI 9/1/2008)

- C2-PROT: Prospective Trials Register
  https://www.campbellcollaboration.org/prot.asp (inactive URI 9/1/2008)

- C2-RIPE: Register of Interventions and Policy Evaluation
  https://www.campbellcollaboration.org/frontend.asp#About%20C2Ripe.asp (inactive URI 9/1/2008)

References

Chalmers, I. (2003). Trying to do more good than harm in policy and practice: The role of rigorous, transparent, up-to-date evaluations. The ANNALS of the American Academy of Political and Social Science, 589(1), 22-40.

Chalmers, I., Hedges, L. V., & Cooper, H. (2002). A brief history of research synthesis. Evaluation and the Health Professions, 25(1), 12-37.

Cooper, H. (1998). Synthesizing research. Thousand Oaks, CA: Sage.

Hunt, M. (1997). How science takes stock: The story of meta-analysis. New York: Russell Sage Foundation Publications.

Hunter, J. E., & Schmidt, F. L. (2004). Methods of meta-analysis: Correcting error and bias in research findings (2nd ed.). Thousand Oaks, CA: Sage.

Lipsey, M. W., & Wilson, D. B. (2001). Practical meta-analysis. Thousand Oaks, CA: Sage.

Nye, C., Turner, H. M., & Schwartz, J. B. (2006). Approaches to parental involvement for improving the academic performance of elementary school children in grades K-6. Retrieved November 7, 2006, from http://db.c2admin.org/doc-pdf/Nye_PI_Review.pdf (inactive URI 7/2009)

Smith, A. (1996). Mad cows and ecstasy. Journal of the Royal Statistical Society: Series A (Statistics in Society), 159(3), 367-383.

Turner, H. M., Nye, C., & Schwartz, J. B. (2004). Assessing the effects of parent involvement interventions on elementary school student achievement. The Evaluation Exchange, 10(4), 4. Retrieved September 9, 2008, from http://www.hfrp.org/evaluation/the-evaluation-exchange/issue-archive/evaluating-family-involvement-programs/assessing-the-effects-of-parent-involvement-interventions-on-elementary-school-student-achievement


Last Updated: Thursday, 06 February 2025 at 02:44 PM CST